CREAM: the scary SSL attack you’ve probably never heard of

2014 was packed with newly discovered SSL† attacks. First we found Java was vulnerable to a new type of “Bleichenbacher” attack. Apple’s SecureTransport, used by both iOS and OS X, went down next with the “goto fail” vulnerability. GnuTLS was vulnerable to a man-in-the-middle attack. OpenSSL perhaps came out as the most notorious with the Heartbleed attack. The NSS library, used by Chrome and Firefox among others, was vulnerable to yet another Bleichenbacher attack known as BERserk. The Microsoft SChannel library used by Windows was vulnerable to a particularly scary remote code execution vulnerability. At least two protocol-level vulnerabilities in SSL were widely circulated: the triple-handshake attack and POODLE. And we still have over a month left in the year!

While 2014 is a notable outlier in terms of the sheer number of attacks discovered and the publicity they’ve received, these sorts of attacks are nothing new. The 2002 “openssl-too-open” attack allowed remote code execution against OpenSSL, making it worse than Heartbleed (but on par with the recent SChannel attack). However, it happened at a time when the Internet was less essential for most people’s day-to-day lives, so perhaps it’s little more than a historical footnote at this point.

Okay, so I’ve just named off a ton of attacks, and your eyes might be glazing over. But you might be wondering why I haven’t even mentioned CREAM yet. Like “openssl-too-open”, CREAM is an old OpenSSL attack dating back to 2005. But where the main takeaway from attacks like “openssl-too-open” is probably “C is too dangerous to use for writing libraries like OpenSSL”, CREAM is an attack that has had a profound effect on cryptography, to the point that many of cryptography’s practitioners spend much of their time worrying about its ramifications.

If you dabble in cryptography, you may have heard of cache timing attacks, but haven’t specifically heard of CREAM. What is CREAM and why is it so bad?

CREAM is a cache timing attack that was used against OpenSSL’s implementation of AES. It allows an attacker on one computer to extract AES keys from another computer over a network. The attack works by measuring round trip timings of known plaintexts encrypted under AES by OpenSSL running on the victim’s computer.

That’s right: simply by measuring minute timing discrepancies over a network, an attacker could extract AES keys from another computer, making it almost as severe as Heartbleed. These timing discrepancies occurred because AES uses a design element known as an S-box, which is effectively a table whose elements we look up based on the AES key. Unfortunately, CPUs are extremely eager to optimize these sorts of lookups with caches, and because the lookups are ultimately based on the key, they introduce what’s known as a side-channel.
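To make the leak a little more concrete, here is a toy model of it. This is a sketch, not the actual attack: it assumes a 256-entry lookup table with 4-byte entries, aligned to 64-byte cache lines, and a first-round lookup whose index is a plaintext byte XORed with a key byte. The function names are made up for illustration.

```python
ENTRY_SIZE = 4         # assumed bytes per table entry
CACHE_LINE_SIZE = 64   # assumed bytes per cache line
ENTRIES_PER_LINE = CACHE_LINE_SIZE // ENTRY_SIZE  # 16 entries share one line


def cache_line_touched(plaintext_byte: int, key_byte: int) -> int:
    """Which cache line of the table a first-round lookup would touch."""
    index = plaintext_byte ^ key_byte   # the lookup index depends on the key
    return index // ENTRIES_PER_LINE


def candidate_key_bytes(plaintext_byte: int, observed_line: int) -> list:
    """Key bytes consistent with a known plaintext byte and an observed line."""
    return [
        k for k in range(256)
        if cache_line_touched(plaintext_byte, k) == observed_line
    ]
```

Under these assumptions, an attacker who knows the plaintext byte and learns which cache line was touched narrows the key byte from 256 possibilities to 16: the top four bits of the key byte are gone. A real attack has to infer the cache line from noisy timings rather than observe it directly.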

Now’s the part in the blog post where I admit the title is clickbait, but hey, I learn from the best. This attack has not previously been branded as CREAM, but if Heartbleed (or BEAST, CRIME, BREACH, and POODLE among others) is any lesson, one of the best ways to raise awareness about an attack is to give it a silly name. Therefore, I am (un)officially branding this particular attack as Cache Rules Everything Around Me.

How can we avoid getting CREAMed? There’s only one option: we must close this side-channel and ensure any cryptographic operations we perform are constant-time. The enemy here is data-dependent timing: things like branching on secrets or doing memory lookups at addresses derived from secrets. Unfortunately, given the way AES was designed, the natural way to implement its S-boxes is with a table lookup. This makes it difficult to implement AES correctly in pure software.
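One common way to avoid a secret-indexed lookup is to read every entry of the table and use a mask to keep only the one you want. The sketch below shows the shape of that idea for a table of byte values. The names `ct_select`, `ct_eq_byte`, and `ct_lookup` are hypothetical, and Python is used only to show the logic: the interpreter and its arbitrary-precision integers give no real timing guarantees, so a production version would be written in C or assembly and then measured.

```python
def ct_select(bit: int, a: int, b: int) -> int:
    """Return a if bit == 1, else b (8-bit values), without branching on bit."""
    mask = (-bit) & 0xFF              # 0xFF when bit == 1, 0x00 when bit == 0
    return (a & mask) | (b & ~mask & 0xFF)


def ct_eq_byte(x: int, y: int) -> int:
    """Return 1 if two byte values are equal, else 0, using only arithmetic."""
    diff = x ^ y                      # zero only when x == y
    return ((diff - 1) >> 8) & 1      # diff - 1 is negative only when diff == 0


def ct_lookup(table: list, secret_index: int) -> int:
    """Touch every entry; keep only the one at secret_index."""
    result = 0
    for i, entry in enumerate(table):  # loop bound depends on public table size
        result |= ct_select(ct_eq_byte(i, secret_index), entry, 0)
    return result
```

The cost is obvious: one lookup becomes 256 reads. That trade-off is a large part of why constant-time software AES is slow, and why hardware support matters so much.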

Fortunately, Intel solved this problem… for the hyperspecific case of AES. Newer Intel CPUs (and CPUs from other vendors, including ARM) now provide a fast, constant-time implementation of AES in hardware. That’s great for AES, but there’s a more general lesson to be drawn from CREAM.

Ideally, ciphers simply wouldn’t contain elements like S-boxes that are difficult to implement in constant time. Instead, ciphers would be designed with mechanical sympathy in mind and be entirely composed of operations that are easy (or at least easier) to implement in constant time. And indeed, many modern ciphers, such as ChaCha20, are designed with this principle in mind.
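For a sense of what that looks like, here is the ChaCha quarter round, the core building block of ChaCha20, as specified in RFC 8439. It is written in Python for readability (so the 32-bit wraparound is done with an explicit mask); the helper names are my own. Notice that it uses nothing but 32-bit addition, XOR, and rotation by fixed amounts: no tables and no branches.

```python
MASK32 = 0xFFFFFFFF


def rotl32(x: int, n: int) -> int:
    """Rotate a 32-bit value left by a fixed, public amount n."""
    return ((x << n) & MASK32) | (x >> (32 - n))


def quarter_round(a: int, b: int, c: int, d: int):
    """ChaCha quarter round: add, XOR, rotate, four times over."""
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 16)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 12)
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 8)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 7)
    return a, b, c, d
```

On typical CPUs each of these operations takes the same time regardless of the values involved, which is what makes this style of design so much easier to implement safely than a table-driven one.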

However, good cipher design alone isn’t enough. What should we do when we have to implement a cipher like AES in software? This is where things get rather tricky. Whenever someone with a crypto background gets a bit short with you about not rolling your own crypto, attacks like this are why.

First, there are rules we can follow to avoid timings that are dependent on secret data. The cryptocoding.net coding rules describe some steps we can take to avoid these problems (in addition to some more general advice):

- Compare secret strings in constant time

- Avoid branchings controlled by secret data

- Avoid table look-ups indexed by secret data

- Avoid secret-dependent loop bounds

- Prevent compiler interference with security-critical operations

- Prevent confusion between secure and insecure APIs

- Avoid mixing security and abstraction levels of cryptographic primitives in the same API layer

- Use unsigned bytes to represent binary data

- Use separate types for secret and non-secret information

- Use separate types for different types of information

- Clean memory of secret data

- Use strong randomness
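As a small illustration of the first rule, here is a sketch contrasting an early-exit comparison with Python’s standard-library `hmac.compare_digest`, which is documented as being designed to resist timing analysis. The `wipe` helper is a hypothetical best-effort nod to the “clean memory” rule; in Python it can only clear a mutable buffer you own, not copies the runtime may have made.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: run time reveals where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False   # returns sooner the earlier the mismatch occurs
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Compare two secrets without stopping at the first differing byte."""
    return hmac.compare_digest(a, b)


def wipe(buf: bytearray) -> None:
    """Best-effort overwrite of a mutable buffer that held secret data."""
    for i in range(len(buf)):
        buf[i] = 0
```

Both comparison functions return the same answers. The difference is that an attacker who can time `leaky_equal` against guesses for, say, a MAC tag can work out the correct value one byte at a time.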

Okay great, we have a set of rules to follow, but is that enough? While rules are good guidelines, that’s all they are. Even if we try to follow them, what if we make mistakes? What if the CPU behaves in a way that we don’t expect? It would seem trying hard to follow the rules is necessary but not sufficient to implement software which is truly constant-time.

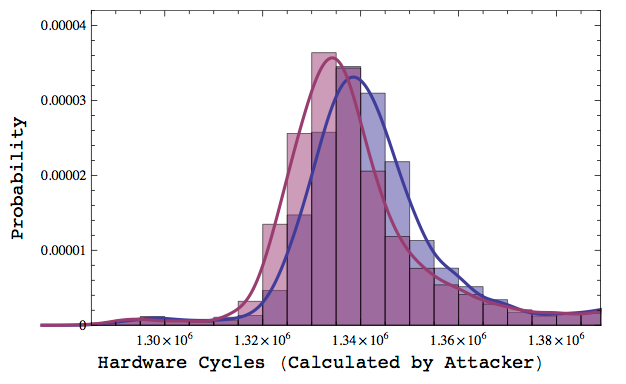

The next thing we need to do is measure. We can better understand the behavior of a particular CPU by measuring it empirically. However, where we might traditionally measure on the granularity of milliseconds or microseconds, for cryptography our measurements need to be more precise. Modern timing attacks combine a large number of samples with statistical analysis to extract even a tiny bit of signal from an otherwise noisy system. The image above is taken from the Lucky 13 attack, which was able to discern timing variability of as little as a microsecond measured over a noisy network full of random delays and jitter.
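To see why a large number of samples matters, here is a small simulation. It is not the Lucky 13 authors’ methodology, just a sketch under made-up assumptions: two operations whose true costs differ by one microsecond, each measured through Gaussian jitter with a standard deviation of 20 microseconds. All of the numbers and function names are illustrative.

```python
import random
import statistics


def simulate_timings(true_cost_us, n, noise_sigma_us, rng):
    """Return n simulated round-trip times: a fixed cost plus Gaussian jitter."""
    return [true_cost_us + rng.gauss(0.0, noise_sigma_us) for _ in range(n)]


def estimate_gap(seed=2014, n=50_000):
    """Estimate the timing gap between two operations from noisy samples."""
    rng = random.Random(seed)
    fast = simulate_timings(1000.0, n, 20.0, rng)   # assumed 1000 us operation
    slow = simulate_timings(1001.0, n, 20.0, rng)   # assumed 1 us slower
    return statistics.fmean(slow) - statistics.fmean(fast)
```

Any single pair of samples tells you nothing, since the jitter is twenty times larger than the gap. Averaged over tens of thousands of samples, though, the estimate settles close to the true one-microsecond difference. Real network noise is not Gaussian, and real attacks use more careful statistics, but the principle is the same.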

To measure with that degree of precision, we need to use the CPU cycle counters that are built into modern CPUs, such as the Time Stamp Counter (TSC) on Intel CPUs. These counters can give us a much more precise picture of what’s happening than the typical wall clock measurements you might be familiar with. How precise? On a 1GHz CPU, each cycle takes a nanosecond. Since modern CPUs are typically over 1GHz, a CPU cycle takes less than one nanosecond. If we’ve implemented a cipher correctly, then each time we use it, no matter what the values of the inputs are, it should always run in the same number of clock cycles.
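The shape of such a measurement harness is simple, even if getting trustworthy numbers out of it is not. The sketch below uses Python’s `time.perf_counter_ns` purely as a stand-in: the standard library cannot read the TSC directly, and interpreter overhead swamps anything at the level of individual cycles, so a real harness would be written in C or assembly around the cycle counter. The function names are hypothetical.

```python
import statistics
import time


def nanoseconds_per_cycle(clock_ghz: float) -> float:
    """Duration of one clock cycle, e.g. 1.0 ns at 1 GHz, 0.25 ns at 4 GHz."""
    return 1.0 / clock_ghz


def time_calls(func, arg, repeats: int = 1000) -> list:
    """Time repeated calls of func(arg), returning per-call durations in ns."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter_ns()
        func(arg)
        samples.append(time.perf_counter_ns() - start)
    return samples


def compare_inputs(func, input_a, input_b, repeats: int = 1000) -> float:
    """Difference in median run time between two input classes, in ns."""
    median_a = statistics.median(time_calls(func, input_a, repeats))
    median_b = statistics.median(time_calls(func, input_b, repeats))
    return median_a - median_b
```

The idea is to feed the function under test different classes of input (for example, all-zero keys versus random keys) and look for any consistent difference. A difference that survives many repetitions suggests a leak; the absence of one on a single machine proves much less.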

Dan Bernstein, the cryptographer who originally created the “CREAM” attack, produced a set of images that visualize timing variability in various cryptographic implementations when measured at the level of individual CPU cycles. Ideally, in these images, we would see a uniform grid, revealing no information:

Unfortunately, when measured this way, OpenSSL’s AES implementation was quite a bit less uniform-looking:

Until we actually measure, it’s difficult to know if an implementation is actually constant time, and even then our measurements only apply to the microarchitecture of the CPU we measured on. If we’re trying to write portable code, we might discover that on a certain platform the compiler has discovered a “weird trick” which would normally make a program more efficient, but in a cryptographic context leaks information. To measure effectively, we need to do it on a wide range of CPUs.

So finally, how can we avoid those problems? Depending on the context, we might just have to knuckle down and implement some CPU-specific assembly. For example, Galois/Counter Mode (GCM), a popular AES “mode of operation” (and the one you should probably be using if you use AES), relies on something known as finite field multiplication, which is difficult to implement in software in a way that is both fast and constant time. Fortunately, Intel added a set of CPU instructions known as CLMUL which can be used to implement GCM in both a fast and constant time manner.
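The core operation that CLMUL provides (via the PCLMULQDQ instruction on Intel) is a carry-less multiply: ordinary long multiplication, but with XOR in place of addition. Here is a sketch of that one step written without branching on the operand bits. The name `clmul64` is my own, the reduction modulo the GCM polynomial is left out entirely, and, as with any Python example, this shows the logic rather than delivering real constant-time behavior.

```python
def clmul64(a: int, b: int) -> int:
    """Carry-less product of two 64-bit values (polynomials over GF(2))."""
    result = 0
    for i in range(64):               # fixed loop bound, independent of inputs
        bit = (b >> i) & 1
        mask = -bit                   # 0 when the bit is clear, all ones when set
        result ^= (a << i) & mask     # add (XOR) the shifted copy, or add zero
    return result
```

For example, `clmul64(0b11, 0b11)` is `0b101`, because (x + 1)² = x² + 1 when coefficients are taken modulo 2. A naive version would write `if bit: result ^= a << i`, which is exactly the kind of secret-dependent branch the coding rules warn about. The masked version does 64 iterations every time, which is safe in principle but slow, and that slowness is the gap the hardware instruction closes.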

And there we have it: if we want to stand any chance of a cryptographic implementation not getting CREAMed in the future, we need to follow the coding rules, measure our implementations on a wide variety of architectures, and use constant-time assembly implementations of certain primitives when needed.

Unless you do all of these things, watch out: you might get CREAMed.

†SSL has technically been renamed to Transport Layer Security (TLS) by the people who standardized it, despite the fact that it actually operates on the layers above the transport layer in the OSI network model. Not only did the people behind TLS confuse everyone by renaming it, but the new name inaccurately describes what the protocol does. What a mess.