The cloud isn’t dead. It just needs to evolve

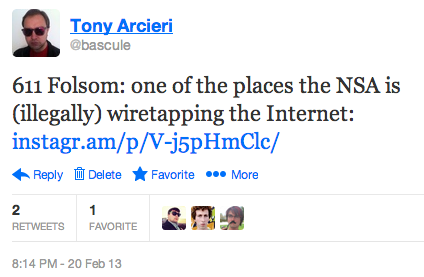

At my previous job, my daily commute took me past 611 Folsom Street in San Francisco. This building is infamous as the home of Room 641A: whistleblower Mark Klein revealed that the NSA had created a secret room there and placed beam splitters on fiber optic cables carrying Internet backbone traffic.

I heard Jacob Appelbaum mention this building’s street address in his 29c3 keynote, along with the NSA’s Utah Data Center, where wiretapped traffic from this room was allegedly funneled and stored. Storing that much traffic sounds like a daunting challenge, but according to NSA whistleblower William Binney, the data center has “yottabyte”-scale capacity, intended to archive all the traffic the NSA can collect for up to 100 years. From then on, I got a little preoccupied with this building every time I walked past it.

It’s a building I took pictures of and tweeted about, like this picture from February:

The idea of an adversary eavesdropping on your traffic is central to cryptography. Cryptographers generally give this adversary the name “Eve”, although the EFF has recently abandoned this pretense and started producing diagrams that identify the adversary as the NSA. For the sort of beam-splitter-based, observe-but-do-not-interfere traffic snooping the NSA is performing, the NSA is pretty much the textbook example of “Eve”. However, given the vast capacity of the Utah Data Center, the NSA can be an incredibly patient Eve, leveraging things like future key compromises and even future algorithm compromises (over a 100-year timespan) to break older traffic dumps.

I’m the sort of person who spends far too much of my day aimlessly thinking about cryptography, and walking past 611 Folsom Street every day couldn’t help but turn my thoughts towards how to defeat the NSA with better cryptography.

The idea of trying to out-crypto a state-level adversary with seemingly boundless funding, resources, and expert personnel at its disposal might seem a little absurd, and it should. For an expert look at whether we can defeat a state-level adversary with cryptographic applications, we can turn to Matt Green’s blog post “Here come the encryption apps!”. Matt does a “Should I use this to fight my oppressive regime?” evaluation of several cryptographic applications and concludes that only one of them, RedPhone by Moxie Marlinspike’s Whisper Systems, fits the bill.

Securing our Internet traffic from an “Eve” like the NSA is a daunting challenge. But it’s one I feel is worth working on…

Trust No One #

“The Cloud” as a concept has somewhat… fluffy security properties (please pardon the pun). We can encrypt data in the cloud, but encryption in and of itself isn’t particularly helpful: what matters is who holds the keys. It’s especially problematic if we trust the same people both to store our personal data encrypted and to hold the encryption keys to that data. If they’re doing both, they can decrypt our personal ciphertexts on a whim.

Matt Green defines a “mud puddle test” for cloud services: let’s say you slip in a mud puddle, which destroys both the brain cells that store the password to your data and the backup of that password you kept on your phone, whose circuit board fries when the water seeps in. Can you still access your data somehow? If so, congratulations, you have failed the mud puddle test.

What’s the problem? If you can still get access to your data, someone else must have held onto your key, and you are therefore trusting that someone with the security of your data. Many services today may encrypt your data server-side using a key they know (and perhaps only they know!). Your data may reach their service encrypted over SSL, but they terminate the SSL connection on their end and can see all of your plaintext as it passes through their servers. If a state-level adversary like the NSA has wormed its way in and requested a backdoor, your cloud provider can hand the NSA anything it wants.

The solution to this problem is to encrypt everything client-side: before the data ever leaves the owner’s computer, it needs to be encrypted with a key known only to the data’s owner. None of this “decrypt it server-side then re-encrypt it” business will do, nor will storing unencrypted keys with a third party. If you want to ensure your data remains confidential, it must be encrypted with a key known only to you.
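As a minimal sketch of the “key known only to you” idea, here is one way to derive that key on the client from a passphrase, using PBKDF2 from Python’s standard library. The function names, iteration count, and salt length are illustrative assumptions, not the Cryptosphere’s design. Only ciphertext and the non-secret salt would ever be uploaded; the encryption step itself should use a vetted authenticated cipher (for example AES-GCM from a maintained library) and is deliberately left out here. Note how this passes the mud puddle test: forget the passphrase and nobody, including the provider, can re-derive the key.

```python
import hashlib
import os

# Illustrative parameters (assumptions): tune the iteration count for your hardware.
PBKDF2_ITERATIONS = 200_000
KEY_LENGTH = 32   # bytes, i.e. a 256-bit key such as AES-256 would use
SALT_LENGTH = 16  # bytes


def new_salt() -> bytes:
    """Generate a random, non-secret salt for one user or vault."""
    return os.urandom(SALT_LENGTH)


def derive_key(passphrase: str, salt: bytes) -> bytes:
    """Derive a symmetric key from a passphrase, entirely on the client.

    The salt may be stored next to the ciphertext; the passphrase and the
    derived key are never sent anywhere.
    """
    return hashlib.pbkdf2_hmac(
        "sha256",
        passphrase.encode("utf-8"),
        salt,
        PBKDF2_ITERATIONS,
        dklen=KEY_LENGTH,
    )
```

The same passphrase and salt always reproduce the same key, so the owner can recover it on any machine, while a different passphrase or salt yields an unrelated key.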

Without the guarantee that you are the one and only holder of the encryption key to a particular piece of data, you have absolutely no assurance that your data is being kept confidential and isn’t being observed by the NSA, or for that matter… the entire rest of the world.

Secure Cloud Storage #

What does “the Cloud” really mean? Who knows. But as far as I can tell, one of the things it does is store data: your personal data, others’ personal data, businesses’ data. Sensitive data of all sorts. How much of this is stored in plaintext, or with keys held directly by the cloud provider?

We are at the mercy of whatever cloud services we use when it comes to how our data is stored. But once you understand the “mud puddle test”, it becomes quite clear that where your data is stored is irrelevant: if you encrypt data before putting it in the cloud, you hold all the keys, and your data is protected by cryptography. Country-specific government snooping should be irrelevant if you can trust cryptography to keep your data secure. There shouldn’t be any worry about trusting nodes in Geneva, San Francisco, Moscow, Beijing, or Tehran.

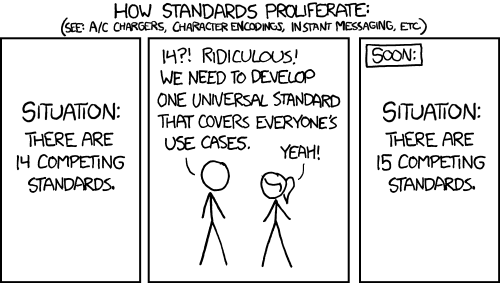

You’d think by now distributed secret storage and sharing would be a solved problem. Unfortunately, no general-purpose system for solving it has ever gained traction. This is something I have personally been longing for since I was a high school student playing around with [MojoNation](http://en.wikipedia.org/wiki/Mnet_(peer-to-peer_network)), and I later discovered that the concept existed much earlier in Project Xanadu, which dates back to the 1960s. Some of the 17 rules of Project Xanadu seem relevant to me today:

- Every Xanadu server is uniquely and securely identified.

- Every Xanadu server can be operated independently or in a network.

- Every user is uniquely and securely identified.

- Every user can search, retrieve, create and store documents.

- Every document can consist of any number of parts each of which may be of any data type.

- Every document can contain links of any type including virtual copies (“transclusions”) to any other document in the system accessible to its owner.

- Links are visible and can be followed from all endpoints.

- Permission to link to a document is explicitly granted by the act of publication.

- Every document can contain a royalty mechanism at any desired degree of granularity to ensure payment on any portion accessed, including virtual copies (“transclusions”) of all or part of the document.

- Every document is uniquely and securely identified.

- Every document can have secure access controls.

- Every document can be rapidly searched, stored and retrieved without user knowledge of where it is physically stored.

- Every document is automatically moved to physical storage appropriate to its frequency of access from any given location.

- Every document is automatically stored redundantly to maintain availability even in case of a disaster.

- Every Xanadu service provider can charge their users at any rate they choose for the storage, retrieval and publishing of documents.

- Every transaction is secure and auditable only by the parties to that transaction.

- The Xanadu client-server communication protocol is an openly published standard. Third-party software development and integration is encouraged.

These are some rather lofty goals, hardly any of which are met by the hypertext system we use today instead of Xanadu: the web. I believe Xanadu’s original goals are worth revisiting, and that creating a world-scale content addressable storage system which is secure, encrypted, and robust is worth pursuing.
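To make “content addressable” concrete, here is a toy in-memory sketch in Python: every blob is stored under the SHA-256 hash of its bytes, so the address doubles as an integrity check. The class and method names are hypothetical, and this is not how Xanadu, Tahoe, or the Cryptosphere actually store data. In an encrypted system you would store ciphertext, so addresses would be hashes of ciphertext rather than of your plaintext.

```python
import hashlib
from typing import Dict


class ContentStore:
    """A hypothetical, in-memory content-addressable store."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # The address is derived from the content itself.
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data  # storing identical content twice is a no-op
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]  # raises KeyError for unknown addresses
        # Re-verify on read: in a real network the bytes would come from an
        # untrusted peer, so never assume they match the requested address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data
```

Because the address is derived from the content, a reader can detect tampering no matter which node served the blob.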

Who is working on this problem? #

Perhaps Xanadu’s scope was too vast, and while its goals were admirable, its complexity doomed it to vaporware. Indeed, Xanadu may be the biggest vaporware project in history. There are, however, many people working on limited subsets of the Xanadu problem, attempting to build secure distributed document storage systems. Here are some of the ones I find interesting:

- Tahoe-LAFS: my favorite on the list, Tahoe applies a capability-based security model to the problem of cloud storage, allowing extremely granular read-write, read-only, or verify-only access to individual files, directories, and subtrees in a distributed filesystem. I recently contributed some UI improvements to Tahoe and would strongly suggest you check it out. Unfortunately, Tahoe has failed to garner any sort of mainstream traction.
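Tahoe’s capability model can be hard to picture, so here is a heavily simplified toy in Python, loosely inspired by the way Tahoe derives weaker capabilities from stronger ones with one-way hashes. It is not Tahoe’s actual cap format or derivation, and every name in it (`WriteCap`, `ReadCap`, `VerifyCap`, the tag strings) is hypothetical. Whoever holds a write cap can derive the read cap, and whoever holds a read cap can derive the verify cap, but the one-way hash means nobody can go in the other direction.

```python
import hashlib
import os
from dataclasses import dataclass


def tagged_hash(tag: bytes, data: bytes) -> bytes:
    # Domain separation: a distinct, length-prefixed tag per derivation step
    # keeps outputs of different steps from being confused with each other.
    return hashlib.sha256(len(tag).to_bytes(1, "big") + tag + data).digest()


@dataclass(frozen=True)
class VerifyCap:
    # In a real system: lets a storage node locate and check data, not read it.
    storage_id: bytes


@dataclass(frozen=True)
class ReadCap:
    # In a real system: would key the decryption of the file's contents.
    key: bytes

    def to_verify_cap(self) -> VerifyCap:
        return VerifyCap(tagged_hash(b"toy-verify", self.key))


@dataclass(frozen=True)
class WriteCap:
    secret: bytes

    def to_read_cap(self) -> ReadCap:
        return ReadCap(tagged_hash(b"toy-read", self.secret))


def new_write_cap() -> WriteCap:
    return WriteCap(os.urandom(32))
```

Sharing a file read-only then amounts to handing someone the `ReadCap` instead of the `WriteCap`; attenuation happens by derivation rather than by an access-control list on a server.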

- Freenet: a perpetual contender in this space, Freenet has somewhat similar goals to Tahoe, but on a global scale. Unfortunately, Freenet has failed to solve problems around accounting, and generally suffers from poor performance and reliability.

- Perfect Dark: a closed-source P2P system that takes a security-through-obscurity approach towards frustrating those who would try to undermine its crypto. It boasts a number of impressive features such as mixnets for improving anonymity, but without the ability to audit its source code, it’s questionable how cryptographically secure it actually is.

- The Cryptosphere: my own personal vaporware solution to this problem. It’s received a little bit of coverage on TechCrunch despite being largely vaporware, but now that the recent NSA scandals have finally dragged the agency’s activities into the public spotlight, I have newfound motivation to continue working on it.

Why create something new? #

I’ll admit that, to a certain degree, the Cryptosphere feels like reinventing the wheel. There are several projects out there that more or less do most of the things I’d like for the Cryptosphere to do. So why bother?

I’d like to take a different approach with the Cryptosphere, one which to my knowledge hasn’t been tried yet, in hopes that I can produce something meaningful to the average person. I feel the problem with existing P2P systems like the ones I mentioned above is that their value proposition is difficult for the average person to understand. That was certainly the case for the last P2P system I tried to write.

I’d like to try to build the secure web from the top down. If you look at the existing codebase (admittedly rather small at this point), you’ll see this isn’t really what I’ve been doing so far: I’ve been spiking out the primitives for encrypted storage. Once these are in place, though, I’d like to build a system whose frontend is a web browser but whose backend runs locally on the same machine, performing encryption and P2P activities.

The end result, in my mind, is a system that allows people to write secure HTML/JS applications able to tap into a rich cryptographic backend running locally on the same machine, a backend which could hopefully provide many of the things people presently run their own servers for today. This is an idea born out of Strobe, a startup I used to work for: with the right set of canned backend services and a way to securely deploy and manage HTML/JS applications, you can author an application which lives entirely in the browser.

It’s ambitious, but it’s an idea I have been thinking about for quite some time. If this sounds interesting to you, please join the Google Group or follow us on Twitter.